Introducing MidReal Morpheus Model

Andrew*, Haoran*, Kaijie*, Rio*, Troy, Shichen, Fuhao

Introduction

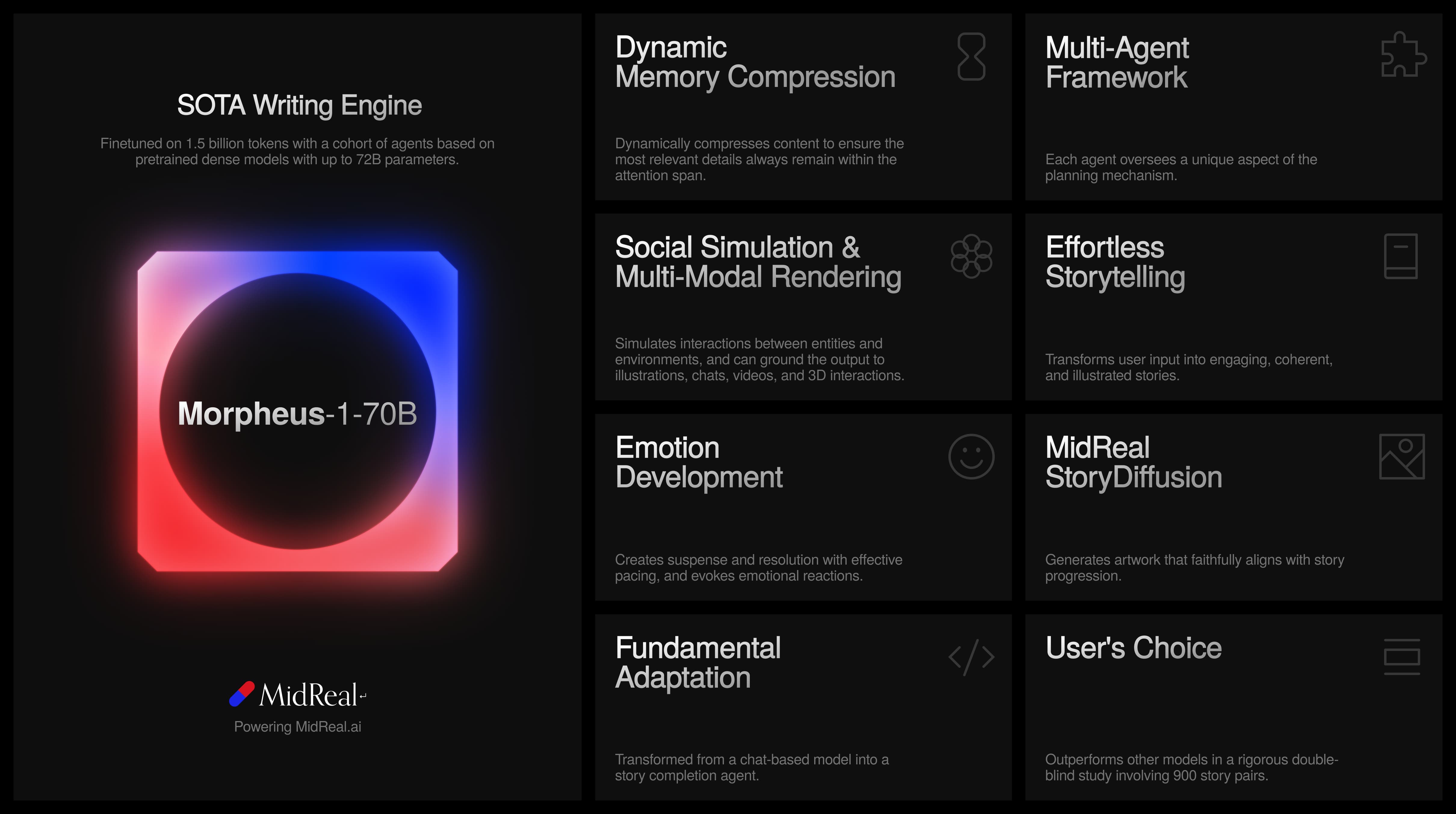

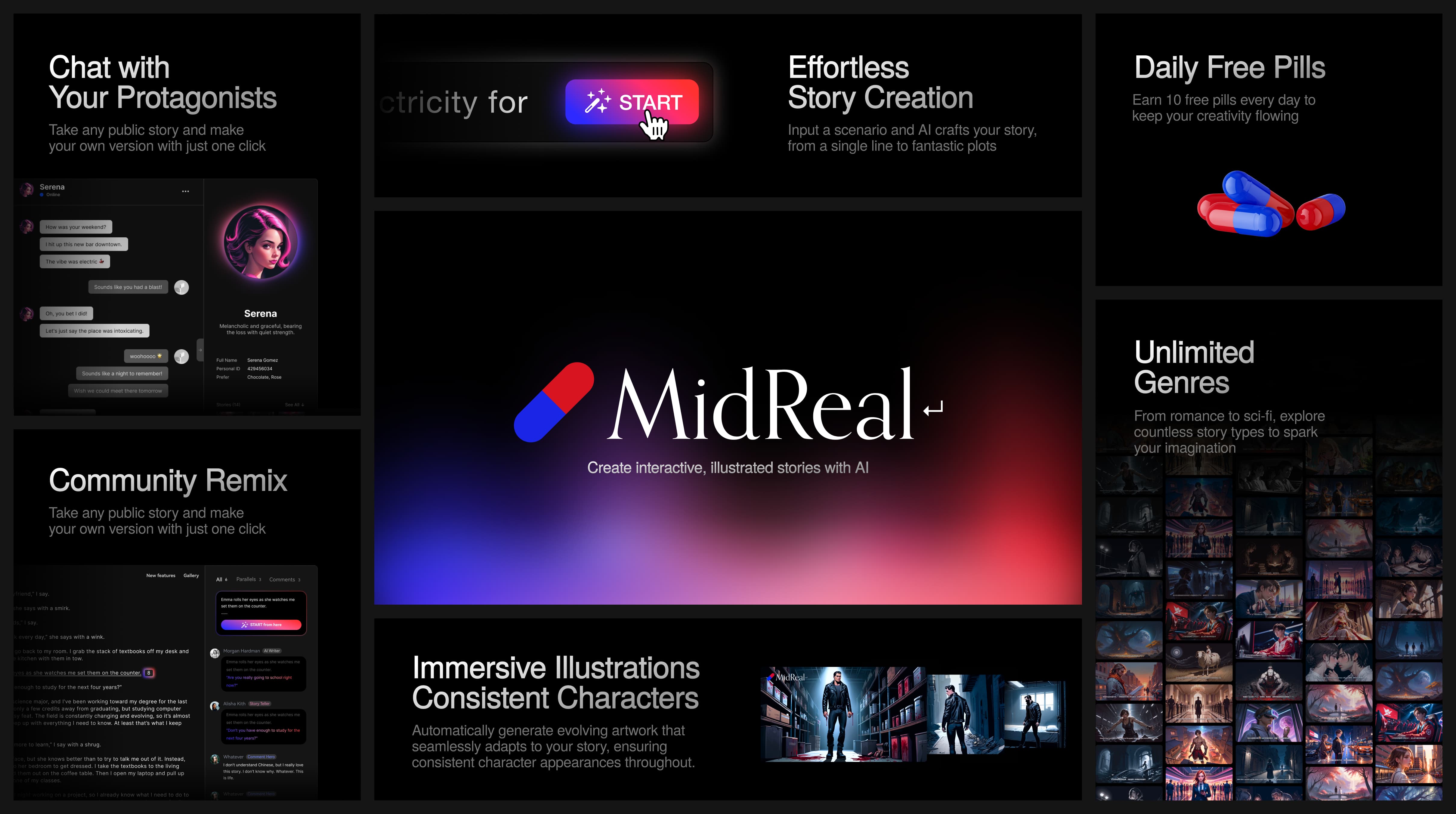

We introduce Morpheus-1-70B (Morpheus), a storytelling model that can create interactive and illustrative stories from text instructions. Specifically, Morpheus is a finetuned cohort of agents forming a narrative engine that generates engaging, coherent, and illustrated stories based on user input. In blind user tests, it has achieved state-of-the-art performance in story writing. Its commercial version is currently deployed on our story generation platform, MidReal (midreal.ai).

Much prior work has explored user-guided creative writing and illustration generation. Story generation frameworks like Re3, DOC, and RecurrentGPT have proven effective in increasing coherence, relevance, and interestingness in long-form storytelling. StoryDiffusion proposed consistent self-attention, enforcing character consistency across generated images and enabling long sequences of illustrations to accompany a story.

While these prior works have shown significant progress, they have not reached the level of performance required for production use. Morpheus, a successor to these methods, is a generalist story and illustration generation model that is already serving over 100,000 creators on MidReal.

In this report, we demonstrate how Morpheus advances storytelling AI through (1) our methods and statistics for post-training Llama 3 (and other pretrained models), which form the foundation of our model, and (2) qualitative and quantitative evaluation of Morpheus's capabilities and limitations. Model and implementation details are not included in this report.

DOC: https://arxiv.org/abs/2212.10077

Re3: https://arxiv.org/abs/2210.06774

RecurrentGPT: https://arxiv.org/abs/2305.13304

StoryDiffusion: https://storydiffusion.github.io/

Recipe

Tree of Thoughts

We drew inspiration from Shunyu Yao's work on Tree of Thoughts, which organizes the problem-solving process into a tree structure. In this structure, nodes represent partial solutions, and edges represent operations that modify those solutions. This mimics human System 2 thinking, which is slow, deliberate, and conscious, and which supports the reasoning needed to solve complex problems.

We discovered that the process of story writing is much like constructing a problem-solving tree. In creative writing, the input scenario serves as the root of the tree, with each subsequent layer of nodes expanding upon the previous layer to shape the story from coarse to fine. The finest outline is then expanded into the actual passage that the user reads.

Morpheus differs from Tree of Thoughts and DOC in that this process is specifically redesigned and finetuned for creative writing. We trained the model using publicly accessible data to learn the optimal strategy of node expansion. To balance the user's intention of redirecting the narrative mid-story, the quality of planning, and generation time, the tree-building process is dynamic, creating or rebuilding nodes as needed.
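The coarse-to-fine expansion and on-demand rebuilding described above can be sketched as follows. This is a minimal illustration, not the Morpheus implementation: `StoryNode`, `expand`, `redirect`, and `toy_expander` are hypothetical names, and the expander is a stand-in for whatever learned node-expansion policy the model actually uses.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class StoryNode:
    """One outline node; deeper nodes hold finer-grained plot points."""
    text: str
    depth: int = 0
    children: List["StoryNode"] = field(default_factory=list)


# Hypothetical expander type: a real system would call a model here.
Expander = Callable[[StoryNode], List[str]]


def expand(node: StoryNode, expander: Expander, max_depth: int) -> None:
    """Recursively grow the outline from coarse to fine."""
    if node.depth >= max_depth:
        return
    node.children = [StoryNode(t, node.depth + 1) for t in expander(node)]
    for child in node.children:
        expand(child, expander, max_depth)


def redirect(node: StoryNode, new_text: str, expander: Expander, max_depth: int) -> None:
    """Rebuild only the subtree under `node` when the user redirects the story."""
    node.text = new_text
    node.children = []
    expand(node, expander, max_depth)


def leaves(node: StoryNode) -> List[StoryNode]:
    """Return the finest outline items in reading order."""
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


def toy_expander(node: StoryNode) -> List[str]:
    # Stand-in for a learned expansion policy: split each node in two.
    return [f"{node.text} / part {i}" for i in (1, 2)]


root = StoryNode("A lighthouse keeper finds a map")
expand(root, toy_expander, max_depth=2)
# The user changes direction mid-story: only the second branch is rebuilt.
redirect(root.children[1], "The map is a forgery", toy_expander, max_depth=2)
outline = [leaf.text for leaf in leaves(root)]
```

Rebuilding a single subtree rather than the whole tree is one plausible way to trade planning quality against generation time when the user redirects the narrative.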

Dynamic Memory Compression

RecurrentGPT uses natural language to simulate a long-short term memory mechanism, with the memory stored on a hard drive. At each timestep, it generates a paragraph and updates this stored memory. This approach allows for the generation of coherent, long-form text by maintaining context and continuity across multiple paragraphs.

Morpheus improves upon this memory mechanism by employing a more dynamic memory system. When writing the next paragraph of the story, all previously composed text is dynamically compressed, with the most relevant information (e.g., a foreshadowing detail) compressed the least. This method allows the model to always have the full story within its context, adjusting the compression ratio for different parts of the story according to the paragraph it plans to write next.
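As a rough illustration of relevance-dependent compression, the sketch below splits a fixed word budget across earlier paragraphs in proportion to a relevance weight, so the most relevant paragraph is compressed the least. Everything here is an assumption made for illustration: the function names, the word-count budget, the relevance weights, and especially the truncation-based `compress`, which stands in for whatever model-based compression Morpheus actually performs.

```python
from typing import List, Sequence


def allocate_budgets(relevance: Sequence[float], total_words: int, floor: int = 3) -> List[int]:
    """Give every paragraph at least `floor` words, then split the rest by relevance."""
    spare = max(total_words - floor * len(relevance), 0)
    total = sum(relevance) or 1.0
    return [floor + int(spare * r / total) for r in relevance]


def compress(paragraph: str, budget: int) -> str:
    """Placeholder compressor: keep the first `budget` words.

    A real system would presumably summarize with a model instead.
    """
    words = paragraph.split()
    if len(words) <= budget:
        return paragraph
    return " ".join(words[:budget]) + " ..."


def build_context(paragraphs: Sequence[str], relevance: Sequence[float], total_words: int) -> List[str]:
    """Compress every earlier paragraph so the whole story fits the budget."""
    budgets = allocate_budgets(relevance, total_words)
    return [compress(p, b) for p, b in zip(paragraphs, budgets)]


paragraphs = [
    "Mara hid the brass key under the third stair before leaving the house",
    "The market was loud and full of traders selling fruit and cloth that morning",
    "Rain fell on the harbor while the ferry waited for its last passengers",
]
# Hypothetical relevance weights for the paragraph about to be written:
# the hidden key (a foreshadowing detail) matters most.
relevance = [8, 1, 1]
budgets = allocate_budgets(relevance, total_words=20)
context = build_context(paragraphs, relevance, total_words=20)
```

Because the weights are recomputed for each new paragraph, the same earlier text can be kept nearly verbatim at one step and reduced to a few words at another.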

Agent Finetuning

FireAct proposed methods for finetuning large language models to create agents with increased performance. It demonstrated that even weaker models like Llama-2-7B could achieve a 77% increase in performance on HotpotQA through agent finetuning. This approach also offers cost, time, and robustness advantages during inference compared to traditional prompted agent architectures.

Morpheus adapts this method of agent finetuning. Specifically, the model is finetuned on ~1.5 billion tokens for entity generation, plot expansion, passage generation, and memory recall. The data comes from publicly accessible stories, separated into two languages and ten different styles. To achieve the best finetuning results, the data is preprocessed into a tree format that matches the structure used at inference time.
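One way to picture tree-formatted training data is to turn each expanded outline node into a prompt/completion pair, where the prompt is the path from the root and the completion is the node's children. The sketch below is only a guess at the general shape: the nested-dict layout, the `to_examples` helper, and the `>` and `|` separators are all hypothetical and are not taken from the actual Morpheus pipeline.

```python
from typing import Dict, List, Tuple

# Hypothetical outline for one story, written as nested dicts.
story = {
    "text": "premise",
    "children": [
        {
            "text": "act 1",
            "children": [
                {"text": "scene 1a", "children": []},
                {"text": "scene 1b", "children": []},
            ],
        },
        {"text": "act 2", "children": []},
    ],
}


def to_examples(node: Dict, ancestors: Tuple[str, ...] = ()) -> List[Dict[str, str]]:
    """Emit one (prompt, completion) pair per expanded node, depth-first."""
    path = ancestors + (node["text"],)
    examples = []
    if node["children"]:
        examples.append({
            # Prompt: the path from the root down to this node.
            "prompt": " > ".join(path),
            # Completion: the node's direct children, i.e. one expansion step.
            "completion": " | ".join(c["text"] for c in node["children"]),
        })
    for child in node["children"]:
        examples.extend(to_examples(child, path))
    return examples


examples = to_examples(story)
```

Each pair then corresponds to a single node-expansion step, which is the same operation the model performs when it builds the tree at inference time.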

MidReal StoryDiffusion

Models like StoryDiffusion and IP-Adapter have solved the issue of maintaining a consistent physical appearance of a character based on a single image. However, creating truly immersive illustrations requires more than visual consistency. There are two critical aspects: First, the illustrations must faithfully follow the story's progression, identifying the best scene to represent each snippet. Second, shot angles and types must be dynamically adjusted to provide cinematic depth to the visual narrative.

To achieve this, we created our own diffusion-based models and finetuned them with a transformer-based LLM to weave the illustration generation module into Morpheus. When generating a story, Morpheus first selects the illustration style. Then for each snippet, it automatically identifies the best scenes and generates illustrations with tailored shot angles and types while maintaining consistent character appearances. This approach allows Morpheus to create a rich and consistent visual experience.

Social Simulation and Multi-Modal Rendering

Morpheus not only constructs narrative trees but also creates a coherent list of semantics that guide story generation. This list includes the premise, background, characters, key objects, motifs, and other essential story elements. For each character, Morpheus also constructs a behavior model. Based on these semantics, Morpheus simulates the interaction between characters and environments while building the tree, much like a game engine simulation. This simulation allows Morpheus to be finetuned to ground the output in other modalities, including character chats, illustration series, videos, and even 3D interactions.

Emotion Development

To create engaging stories, Morpheus establishes suspense and resolution with effective pacing. We finetuned the model with enhanced pacing parameters to control the story's tempo. Pacing also includes the anticipated emotional reactions and the characters' goal progression in each passage.

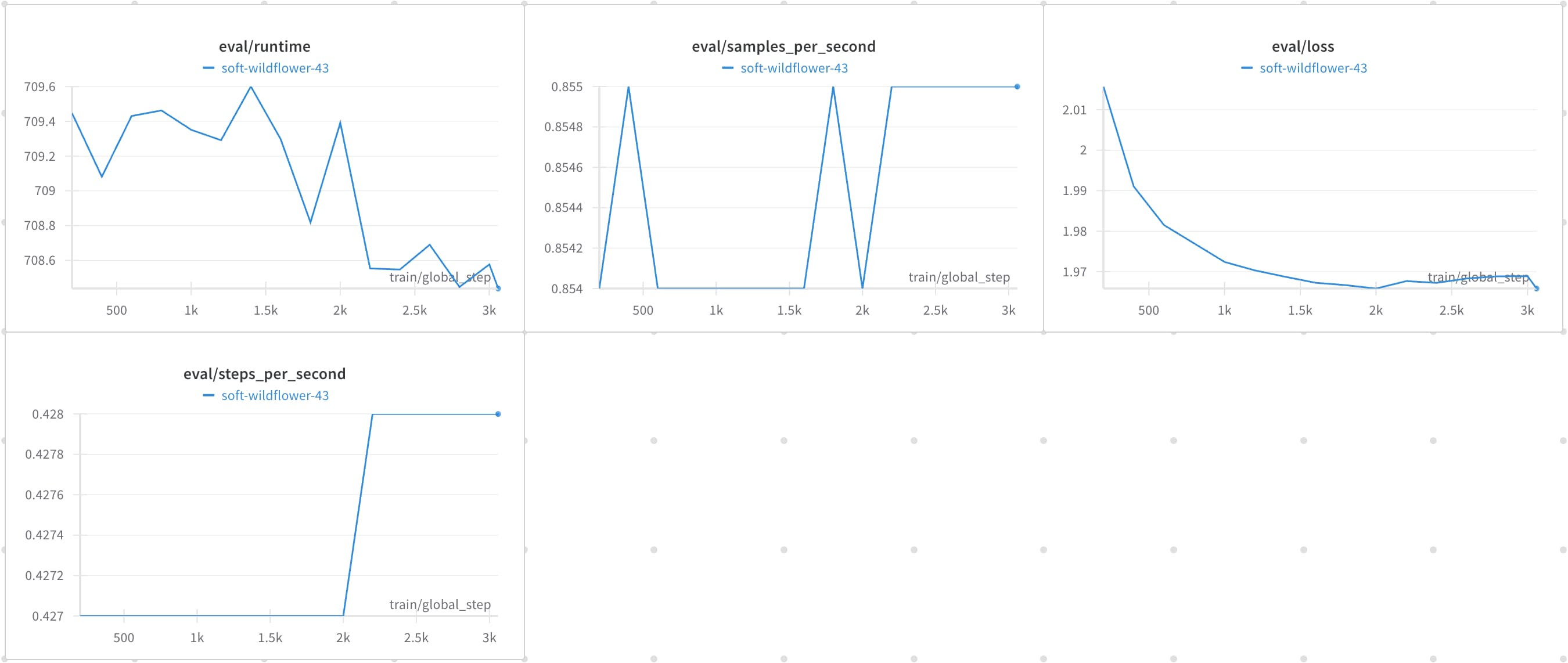

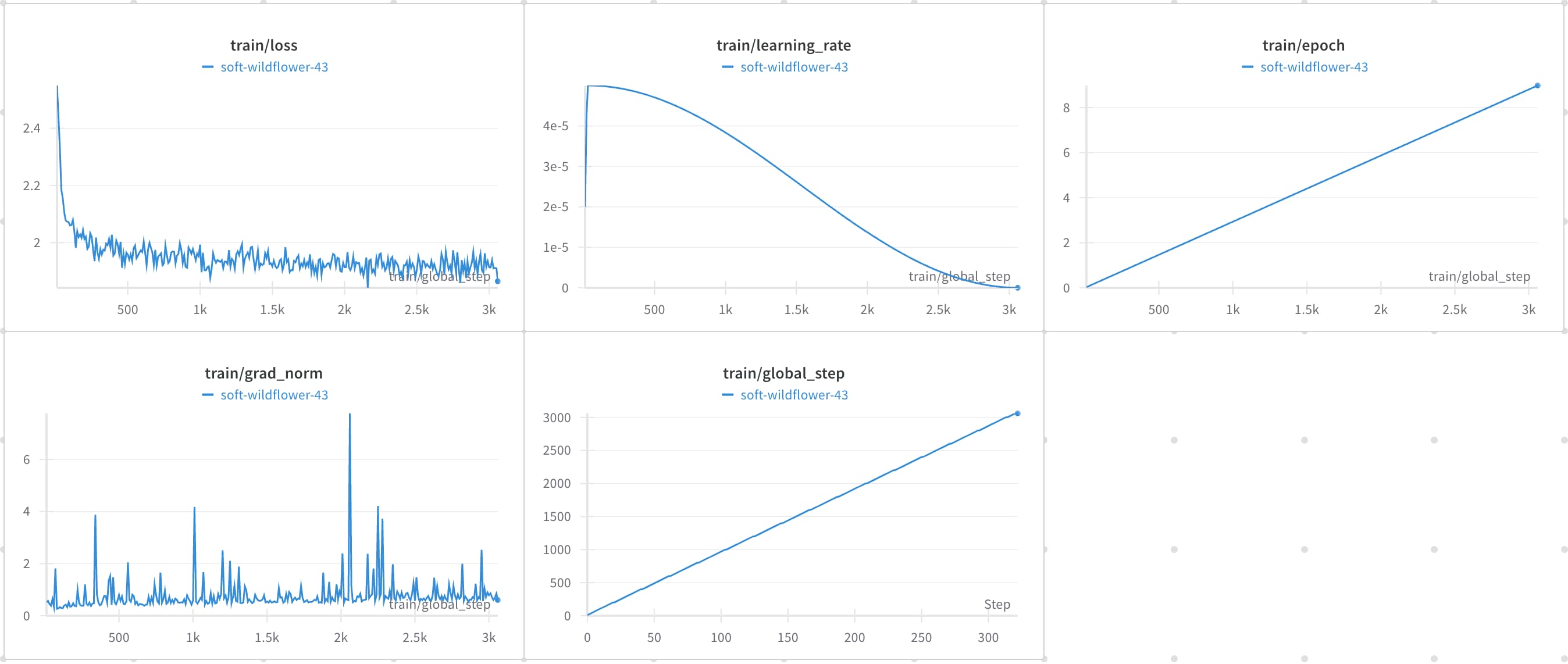

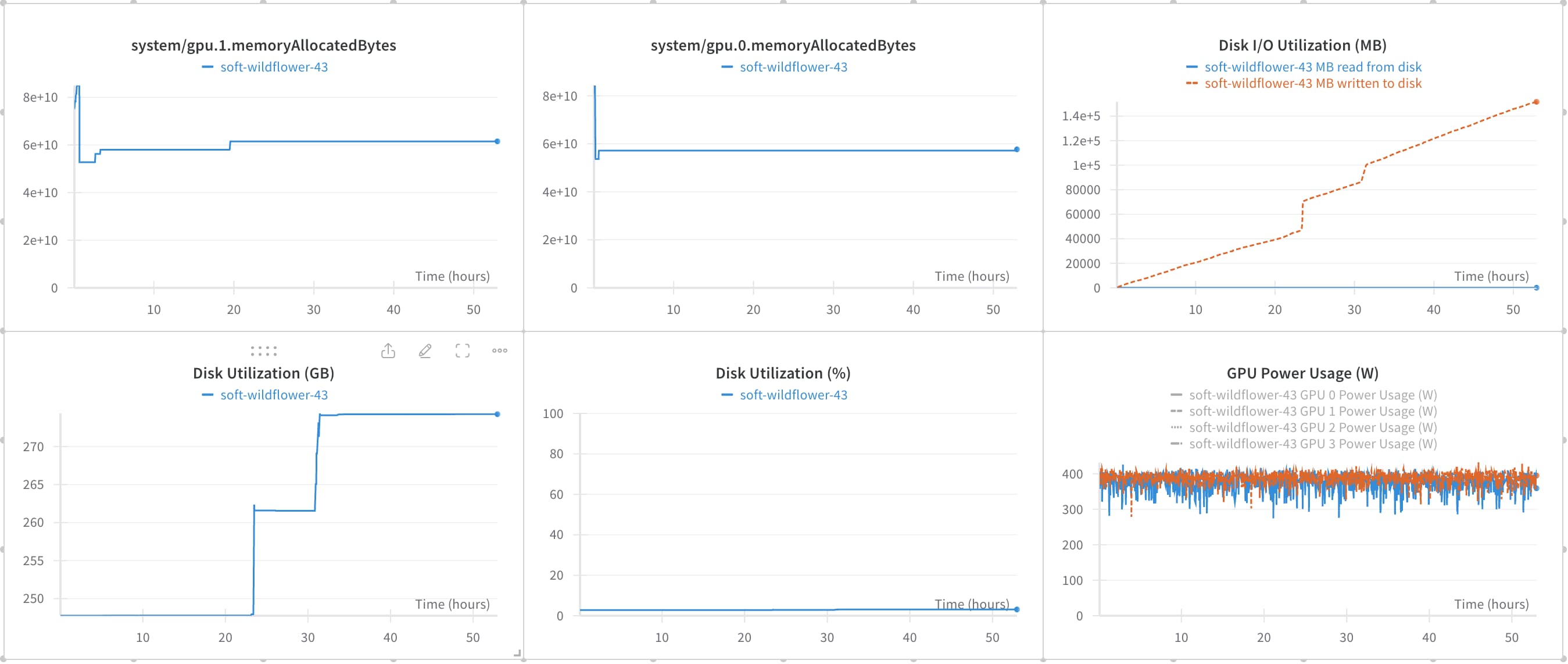

Training Statistics

Training configuration: We used the AdamW optimizer with a cosine learning rate scheduler and an initial learning rate of 5e-5. The batch size was 8 per device with 8 gradient accumulation steps, resulting in an effective batch size of 128, and the maximum sequence length was 8192. LoRA finetuning was applied to the q_proj and v_proj layers. Model states were sharded across devices using DeepSpeed ZeRO stage 3 and fully sharded data parallel (FSDP) strategies, and 4-bit quantization, FP16 precision, and Flash Attention were used for memory efficiency. We trained for 7 epochs with a 10% validation split, evaluating every 100 steps and saving the best model at the end; loss was plotted during training. The experiments ran on a cluster of NVIDIA A100/A800 GPUs, with 8 GPUs per node connected via NVLink and NVSwitch and nodes interconnected using InfiniBand.
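The batch-size and learning-rate figures can be checked with a little arithmetic. The sketch below assumes the usual relation effective batch = per-device batch × accumulation steps × data-parallel ranks; under that assumption the stated numbers imply two ranks, which is an inference and not something the report states. The cosine schedule shown has no warmup and decays to zero, which is also an assumption, and `total_steps=1000` is an arbitrary value chosen for illustration.

```python
import math

# Values stated in the training configuration.
PER_DEVICE_BATCH = 8
GRAD_ACCUM_STEPS = 8
EFFECTIVE_BATCH = 128
INITIAL_LR = 5e-5


def implied_data_parallel_ranks(per_device: int, accum: int, effective: int) -> int:
    """Solve effective = per_device * accum * ranks for ranks."""
    ranks, remainder = divmod(effective, per_device * accum)
    if remainder:
        raise ValueError("effective batch size is not a multiple of per_device * accum")
    return ranks


def cosine_lr(step: int, total_steps: int, initial_lr: float = INITIAL_LR, min_lr: float = 0.0) -> float:
    """Cosine decay from initial_lr to min_lr, with no warmup (an assumption)."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return min_lr + 0.5 * (initial_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


ranks = implied_data_parallel_ranks(PER_DEVICE_BATCH, GRAD_ACCUM_STEPS, EFFECTIVE_BATCH)
lr_start = cosine_lr(0, total_steps=1000)
lr_middle = cosine_lr(500, total_steps=1000)
lr_end = cosine_lr(1000, total_steps=1000)
```

Under these assumptions the learning rate starts at 5e-5, passes through half that value at the midpoint, and reaches zero at the final step.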

Metrics

W&B Metrics

The figures below show Weights & Biases (W&B) metrics from an example training job.

Human Evaluation

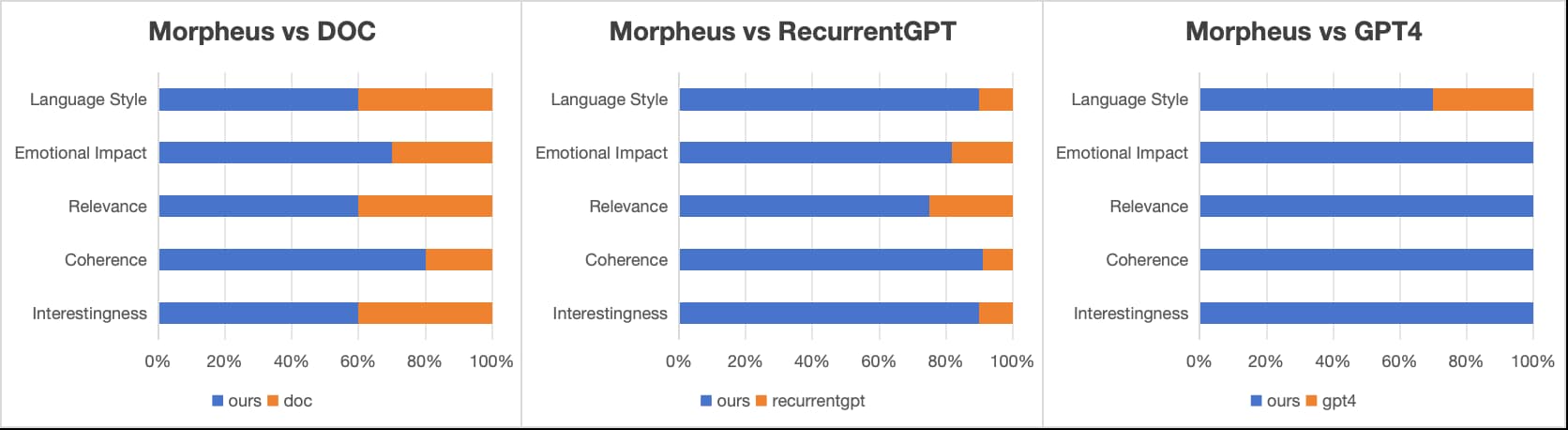

To rigorously assess the quality of stories generated by Morpheus, we established an evaluation framework comprising five distinct categories. Each category is designed to measure key aspects of narrative output that contribute to a satisfying reading experience:

- Interestingness: The excerpt engages and captivates the reader.
- Coherence: The excerpt flows logically and is easily understandable.
- Relevance: The excerpt adheres to the provided premise.
- Emotional Impact: The excerpt evokes an emotional response, whether it be excitement, tension, or surprise.
- Language Style: The writing has high literary quality, with rhythm and flow that is pleasing to read.

We employed a double-blind study involving 900 story pairs. Each pair consisted of a story segment generated by Morpheus and a corresponding segment produced by a competing model. Evaluators were unaware of which segment was generated by which model to prevent bias in the assessment process.

The results, depicted in the figure below, illustrate that Morpheus significantly outperforms other models in four out of five categories. Notably, it excels in coherence, interestingness, emotional impact, and language style, confirming its ability to produce well-structured, engaging, and emotionally resonant stories with a high degree of literary finesse.

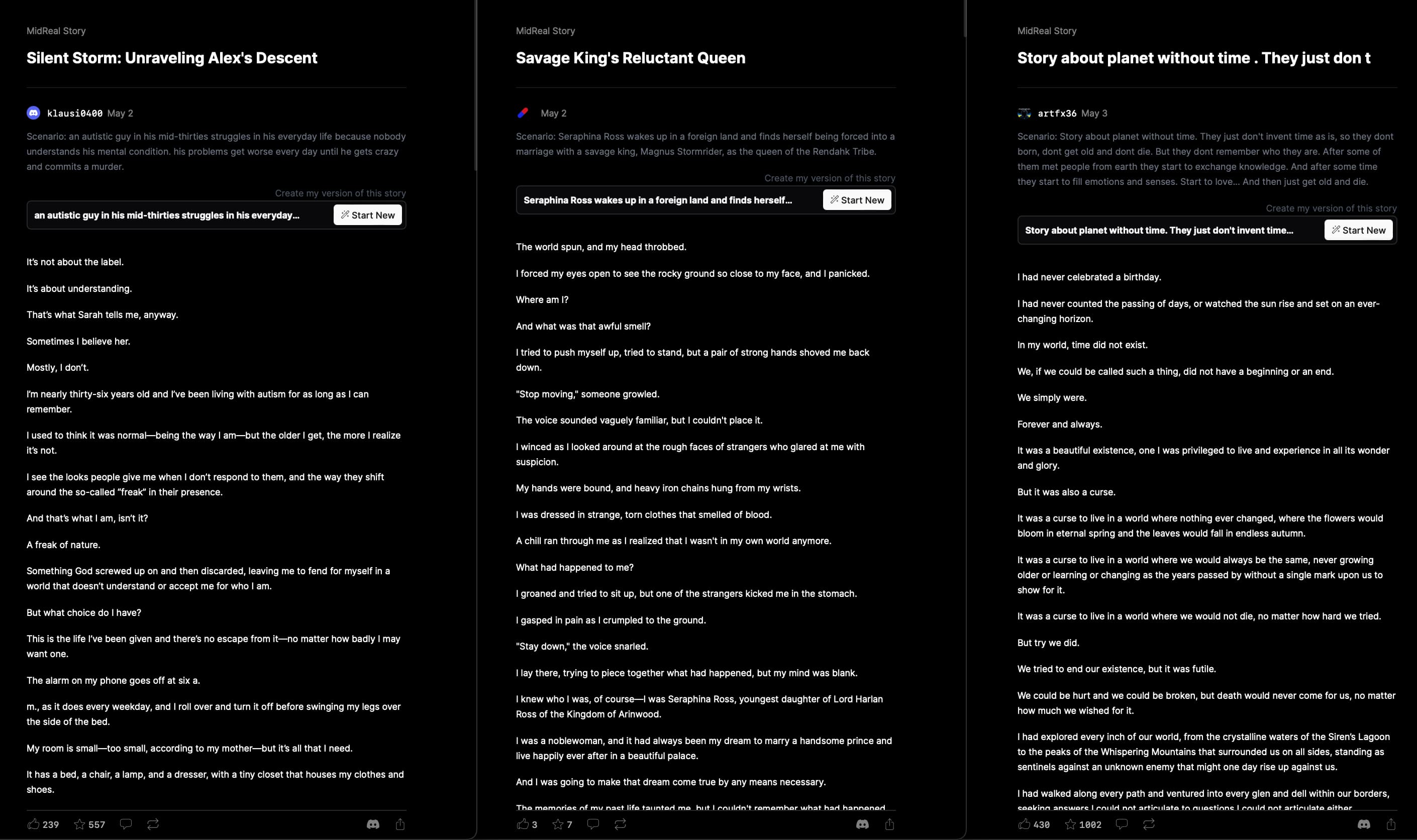
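Blind pairwise judgments like those described above are often summarized per category as a win rate, optionally with a sign test against a 50/50 null. The sketch below shows that calculation; the report does not say which statistical procedure was used, so the test is an assumption, and the tallies are toy numbers chosen for illustration, not results from the study.

```python
from math import comb


def win_rate(wins: int, losses: int) -> float:
    """Share of decided pairs won; ties are excluded by the caller."""
    return wins / (wins + losses)


def sign_test_p_value(wins: int, losses: int) -> float:
    """Two-sided exact binomial test against a 50/50 null."""
    n = wins + losses
    k = max(wins, losses)
    # Probability of a split at least this lopsided in one direction.
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


# Hypothetical tallies for one category, NOT results from the study.
toy_wins, toy_losses = 14, 6
rate = win_rate(toy_wins, toy_losses)
p_value = sign_test_p_value(toy_wins, toy_losses)
```

With only 20 decided pairs, a 70% win rate is not enough to reject the 50/50 null at the usual 0.05 level, which is one reason a study of this kind needs many pairs per category.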

Examples

Here are examples of stories generated by Morpheus from our alpha users. While these stories may not yet match bestseller quality, they clearly demonstrate the potential of AI to write engaging, coherent narratives.

https://midreal.ai/s/eAzF

https://midreal.ai/s/eAp9

https://midreal.ai/s/eAsE

https://midreal.ai/s/eADe

Discussion

Storytelling vs Chat

Today, most large language models are trained as chat models, where they respond to user input as an assistant or any role the user defines. This approach is effective for building foundational services, allowing application developers to guide the model in desired directions.

Storytelling, however, is naturally a completion task rather than a chat task. Morpheus is an attempt to use post-training to erase the chat nature of the pretrained model. By focusing on story completion and narrative coherence, Morpheus generates compelling stories based on user scenarios. We are also exploring deeper ways to retrain the model to fundamentally adapt it for storytelling.

Agent with Post-Training vs Prompting

Traditionally, agents have been created through prompt-based frameworks. The Reasoning and Acting framework (ReAct), proposed by Shunyu Yao, was one of the first outstanding examples of a prompt-based agent. However, prompt-based agents have several drawbacks, including (1) slow speed due to repeated back-and-forth prompt interactions, (2) low robustness, and (3) an underdefined action policy, because only a few examples can be provided in the prompt.

Morpheus, on the other hand, is a finetuned agent that is faster, more robust, and more accurate. Larger datasets give the finetuned agent stronger learning support, allowing it to derive a more sophisticated policy from which to sample actions and to adapt to all kinds of inputs and states. Post-training also makes repeated prompting unnecessary.

Post-Training with Open Weights Model vs Proprietary Model

In the early stages of our experiments, we used proprietary models. However, with the recent release of open-weight models like Llama 3 and Mixtral 8x22B, the landscape has shifted dramatically. We've observed that the performance gap between open and proprietary models is shrinking, while the development of post-training technologies applicable to open-weight models has outpaced the finetuning methods offered by proprietary model platforms. We expect this trend to continue over the next 2-3 years, with post-training of open-weight models becoming more mainstream.

Vision

We believe storytelling holds the future of AI-based entertainment. While products like Character.AI have captured significant attention, chatbots are unlikely to represent the ultimate form of AI-based media.

We believe "story" is a more fundamental form of media. Manga, movies, and games all revolve around narratives involving protagonists and their interactions with their environments. By harnessing the power of storytelling, we expect to expand from a solid foundation of narratives into higher modalities to provide more immersive experiences.

We are committed to lowering the execution barriers to writing, empowering anyone to become a qualified storyteller, and shaping a future where countless creative ideas and diverse media flourish as never before.

Future Directions

Moving forward, we aim to enhance Morpheus's storytelling capabilities by integrating more multi-modal rendering techniques. This will involve generating illustrations, videos, and 3D interactions that align with the evolving story. By refining memory compression and long-form planning, we will create stories with even greater depth and consistency.

In parallel, we plan to explore more extensive post-training methods for creating agents, allowing Morpheus to better capture user preferences and generate stories that resonate with diverse audiences. We will also investigate the potential for collaborative storytelling, enabling multiple users to shape a shared narrative while maintaining coherence and immersion.

In conclusion, Morpheus represents a significant leap forward in AI storytelling. By focusing on storytelling as a core application and leveraging the latest advancements in large language models and diffusion models, we believe Morpheus will redefine how people interact with stories, making interactive, personalized narratives accessible to everyone.

Acknowledgement

We gratefully acknowledge the resources and support provided by MIT, HKUST, SJTU, and the MidReal team. We also extend our sincere thanks to Ciaran, Shunyu, Zhenbang, and Jianuo for their invaluable contributions to this work.